History of Google's Updates

Before the World Wide Web, and even before earlier Internet protocols such as the File Transfer Protocol, people were already thinking about data storage and about efficient ways to search archived information. As early as 1945, Vannevar Bush, in his essay "As We May Think", described what today would be considered a superior search engine. Believing that systems for indexing information should work as the human mind does, by association, he proposed an associative information storage and retrieval system, which he called a "memex". This idea is the pioneering concept that later gave birth to modern search engines, from Archie (1990), the grandfather of all search engines, to Google (1996), the reigning king of all search engines.

A webmaster's success arguably tracks his understanding of a search engine's ranking system, and no search engine's ranking algorithm has ever been as coveted as Google's PageRank. Google's search engine was developed as part of a research project by two Stanford Ph.D. students, Larry Page and Sergey Brin, who sought to determine a web page's importance by analyzing the quantity and quality of its backlinks. PageRank is the algorithm Page and Brin developed to convert backlink data into a numerical measure of a web page's importance. Backlinks had rarely been used as a variable in web page ranking, and Page and Brin found out early on that a search engine based on PageRank would far outperform the keyword-frequency technique used by most search engines of the day.
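To make the idea of turning backlink data into a numerical score more concrete, here is a minimal sketch of the textbook PageRank iteration in Python. It is an illustration only, not Google's production code: the function name `pagerank`, the toy link graph `toy_web`, the iteration count, and the even redistribution of rank from pages with no outgoing links are all assumptions made for this example. The damping factor of 0.85 is the commonly cited default.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank scores for a small link graph.

    `links` maps each page to the list of pages it links to.
    All names and defaults here are illustrative assumptions.
    """
    # Collect every page that appears as a source or as a link target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)

    # Start with rank spread evenly across all pages.
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a small baseline share of rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped rank evenly among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: "c" collects the most backlinks, "d" has none.
toy_web = {
    "a": ["b", "c"],
    "b": ["c"],
    "c": ["a"],
    "d": ["c"],
}

ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(page, round(score, 4))
```

In this toy graph, the page with the most incoming links ends up with the highest score, and a link from a highly ranked page passes on more weight than a link from a page nobody links to. That is the "quantity and quality of backlinks" idea in miniature.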

Association, as Vannevar Bush hypothesized in 1945, is the most efficient data-retrieval technique. PageRank's use of backlinks works by association because backlinks provide a reliable indication that a website belongs to a certain topical community. This technique helps ensure that a search query is met with the most relevant information, and it is this simplicity that makes Google the most competent search engine.

As webmasters and bloggers know, developing a strong search engine optimization plan is vital to reaching a profitable position on Google's SERPs. However, as Google's software engineers know, many people use black-hat SEO tactics to reach profitable positions unfairly. These low-quality, ad-filled, keyword-stuffed spam websites climb the ranks at the expense of quality websites, which are cheated of a fair game. Self-described as a "company that does good things for the world", Google developed the Panda, Penguin, and Hummingbird algorithms as updates to its search algorithms. All of them are meant to ensure that only the best-quality, query-relevant information reaches the top-ranking positions.

Google Panda Updates

Main target: Low-quality content.

Google Panda was first implemented in February of 2011 as a response to the outcry against content-farm sites sneaking into top listings with poor-quality content. This update to Google's search engine algorithm was the first to have a high impact on search queries in the United States. Consistent with its intention, the update was originally called the "Farmer Update" and mainly targeted sites plagued with advertisements, plagiarized content, or only small amounts of original content. In other words, it targeted shallow websites that prioritize profit over consumer needs. Over 26 Panda updates have been implemented since 2011, discouraging manipulative search engine optimization techniques.

Google Penguin Updates

Main target: Low-quality links.

Google Penguin was implemented in April of 2012 with the same intention as Google Panda but a different target. Whereas Google Panda targeted low-quality content on the pages of a website, Google Penguin sought to penalize websites using black-hat SEO techniques to crawl to high-ranking positions on Google's SERPs. The Penguin algorithm specifically targeted web pages containing unnatural links, keyword stuffing, doorway pages, and essentially any other form of black-hat SEO. As opposed to Google Panda, which penalizes the overall content of a website, Penguin only affects individual pages. It is much easier to recover from a Penguin hit than from a Panda hit. The idea behind Penguin is simple: "play a fair game".

Google Payday Loans Update

Main target: Spam.

Google first implemented the Payday Loans update to its search algorithm in June of 2013. Named after spam-prone search queries such as "payday loans", "bad credit", "insurance", and related topics, the Payday Loans update specifically targeted spammy websites by focusing on the search queries notorious for producing such results.

Google Hummingbird Update

Main target: Understanding intent.

Google Hummingbird, first implemented in August 2013, differed from Panda, Penguin, and Payday Loans. Rather than seeking out low-quality pages or pages plagued by black-hat SEO techniques, it sought to improve Google's core search algorithm by taking into account the entirety of a search query, analyzing context as well as specific keywords. Rather than merely producing results that match the words of a query, Hummingbird seeks to understand the user's intention in making it. Thus, the best way to optimize a webpage for Hummingbird is to provide relevant content that not only gives factual information about a query but also meets the user's needs on a subjective level.

Google Updates and the Webmaster

The intention of the Google updates is clear: to maintain its spot as the best search engine, Google is constantly seeking ways to improve its ranking algorithm. Already sophisticated when it first launched in 1996 as a research project, Google has only improved over the years, keeping ahead of web vultures by demanding real quality from top-ranking websites.

As a webmaster, it is important to understand how Google views a website. According to Google's algorithmic history, the importance of a website is determined by a few key factors: the links coming into your page, weighted by the linking page's rank and importance; the relevancy of your page to a search query, based on keywords and the overall context of your text content; and the associative relevancy of your page, determined by the keywords other sites use to link to your page.

Put simply, Google is looking for quality, a characteristic that will only become more important with future updates.

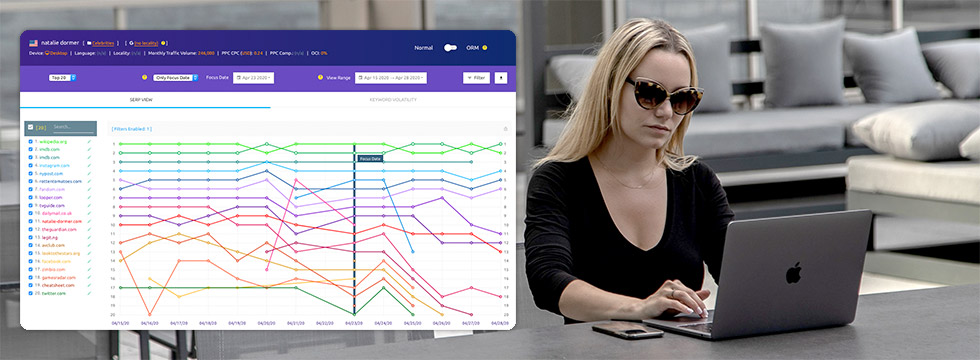

Enter SERPWoo

At SERPWoo, our goal is to construct a day-to-day breakdown of rankings on the search engine results pages (SERPs) for webmasters who want a better understanding of which websites search engines prefer. Learn more by visiting our About page.

Check Out SERPWoo's Features

Get a list of our major and unique features which professionals use every day to help their online marketing campaigns succeed: Features